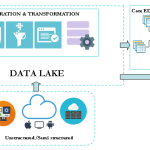

Enterprise Data Warehouse (EDW) offload is a widespread big data use case, largely because traditional data warehouses and their related ETL processes struggle to keep pace with big data integration. Many organisations are looking to integrate new big data sources that come with the following constraints: volume, velocity and […]

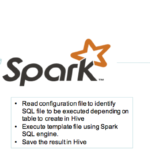

Apache Spark

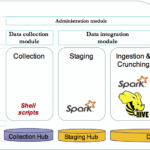

In our previous blog post, we discussed the implementation of a framework built on top of Spark that enables agile, iterative data discovery between legacy systems and new data sources generated by IoT devices (a smart city data set). We will now explore the components of this framework in detail. The framework is composed of […]

In this series of blog posts, we will outline and explain in detail the implementation of a framework built on top of Spark that enables agile, iterative data discovery between legacy systems and new data sources generated by IoT devices. The Internet of Things (IoT) is bringing new challenges for data practitioners. It’s […]

This post is meant to help you take your first steps into data processing with Apache Spark using the Python API. In the age of Big Data processing, Hadoop MapReduce (an open-source implementation of Google's MapReduce model) laid the foundation for processing “embarrassingly parallel” operations on distributed machines. Unfortunately, it suffers from programmability limitations and degradation in […]
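To illustrate what "embarrassingly parallel" means in the MapReduce model, here is a minimal pure-Python sketch of a word count split into map, shuffle and reduce phases. It does not use Hadoop or Spark; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration. Each input line is mapped independently, and each key is reduced independently, which is what lets a framework spread both steps across machines.

```python
from collections import defaultdict
from functools import reduce
from typing import Dict, Iterable, List, Tuple


def map_phase(line: str) -> List[Tuple[str, int]]:
    # Emit (word, 1) for every word. Each line is handled on its own,
    # so lines could be processed on different machines in parallel.
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Group intermediate values by key, as the framework does between map and reduce.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    # Combine the values of each key. Keys are independent of one another,
    # so this step is also parallelisable.
    return {key: reduce(lambda a, b: a + b, values) for key, values in groups.items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # Run the three phases sequentially on a single machine.
    mapped = [pair for line in lines for pair in map_phase(line)]
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    docs = ["Spark extends MapReduce", "MapReduce maps then reduces"]
    print(word_count(docs))
```

In PySpark, the same pipeline is typically expressed with the RDD methods `flatMap`, `map` and `reduceByKey`, with Spark handling the shuffle and distribution.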